This is a guest post by Harrison Jones.

Onsite optimization of websites is the most important factor in ranking well in search engine results. You can build all the links you want, but if your website isn't optimized, Googlebot won't be able to crawl it properly. Over the past several years, Google and Bing have released guidelines to help webmasters make their websites more crawlable. This post consolidates those guidelines into a single onsite optimization reference. Audits should be performed before the launch of any new website design, and at least twice per year to keep up with changing industry standards.

Canonicalization and URL Structure

www or non-www, that is the question. Rather than rehashing this topic here, I have written a handy guide that explains URL structure best practices in full. To summarize: only one URL should reach the homepage of your website. Whether you use www is up to you. Just make sure only one version resolves (the other variants should redirect to it), and that it isn't followed by index.php, index.html, etc. URLs should contain words only, with no numbers or symbols. Separate words with dashes rather than spaces or underscores, and use only lowercase letters. Each URL should contain a keyword phrase and be no longer than five words overall.
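
A minimal Python sketch of the URL rules above. The five-word cap and the choice of host are the article's rules of thumb. The function names `make_slug` and `canonical_home` are hypothetical. On a live site, the non-canonical homepage variants would also need a server-side 301 redirect to the URL this function returns.

```python
import re
from urllib.parse import urlsplit, urlunsplit

# Default-document names that should never trail the homepage URL.
INDEX_FILES = ("index.html", "index.htm", "index.php")


def make_slug(title: str, max_words: int = 5) -> str:
    """Turn a page title into a lowercase, dash-separated, letters-only slug."""
    words = re.findall(r"[a-z]+", title.lower())  # drops digits and symbols
    return "-".join(words[:max_words])  # keep at most max_words words


def canonical_home(url: str, use_www: bool = True) -> str:
    """Collapse homepage variants (www/non-www, index files) into one URL.

    Query strings and fragments are dropped, so use this for the homepage only.
    """
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]
    if use_www:
        host = "www." + host
    path = parts.path
    for name in INDEX_FILES:
        if path.endswith("/" + name):
            path = path[: -len(name)]  # keep the trailing slash
            break
    return urlunsplit((parts.scheme or "http", host, path or "/", "", ""))
```

For example, `canonical_home("http://mysite.com/index.php")` and `canonical_home("http://WWW.MySite.com")` both collapse to `http://www.mysite.com/`.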

Content Optimization

Every page on the website should have unique, high-quality content that uses proper optimization techniques. Homepages should have a bare minimum of one paragraph of keyword-rich content, and a good rule of thumb is three or more paragraphs. Without quality content, Googlebot will have a tough time determining how relevant a page is to the keyword you want to target. The title of the page should be contained within an h1 tag and should include a keyword, preferably one similar to the keyword in the page's title tag. Use plenty of keywords in the body, but avoid keyword stuffing. There's no real rule of thumb for what counts as keyword stuffing, but if it looks like keyword spam, Google will probably read it as spam.

Each page should use a content hierarchy to establish the importance of key phrases. Put the page title in an h1 tag, and use only one h1 per page. Place subheadings in h2 and h3 tags, each including a keyword. Bolding and italicizing phrases within paragraphs can signal their importance to search engines. Linking to other pages on the website with good, keyword-rich anchor text, as well as to other trusted websites, will also increase the value of the content. Keep each page to around one hundred links or fewer, because a page with too many links may be penalized as spammy. Non-navigation links should read naturally within the content. Follow this content optimization checklist before publishing content to ensure it is properly optimized.
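
The h1 and link-count rules can be spot-checked with Python's standard-library HTML parser. This is a rough sketch. `audit_page` is a hypothetical helper, and it counts every `<a href>` on the page, navigation included. The 100-link ceiling is the article's rule of thumb, not a published limit.

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Collects h1 text and counts <a href> links on a single page."""

    def __init__(self):
        super().__init__()
        self.h1_texts = []
        self.link_count = 0
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self._in_h1 = True
            self.h1_texts.append("")
        elif tag == "a" and dict(attrs).get("href"):
            self.link_count += 1

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_h1:
            self.h1_texts[-1] += data


def audit_page(html, keyword, max_links=100):
    """Return a list of human-readable problems (an empty list means no issues)."""
    parser = PageAudit()
    parser.feed(html)
    parser.close()
    problems = []
    if len(parser.h1_texts) != 1:
        problems.append(f"expected 1 h1, found {len(parser.h1_texts)}")
    elif keyword.lower() not in parser.h1_texts[0].lower():
        problems.append("keyword missing from h1")
    if parser.link_count > max_links:
        problems.append(f"{parser.link_count} links exceeds {max_links}")
    return problems
```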

Cloaking and duplicate content are huge no-nos for SEO. Cloaking, which means showing search engines different content than visitors see, is a very risky practice that can get your website heavily penalized. Matt Cutts recently did a wonderful video explaining how cloaking works and why it's bad. Duplicate content is another way to get on Google's blacklist. Internet leeches love scraping content from websites and reposting it elsewhere to collect advertising money. Google caught on to these practices and changed its algorithm to filter out duplicate content. Even two pages with duplicate content on the same site can get your site penalized.

If you absolutely must have duplicate content on your website, which is necessary in rare cases, add a rel=canonical link element to the duplicate page that points to the preferred version. This tells the spiders to pay attention to only one of the two pages. Copyscape and Plagiarisma are two great tools for finding content-scraping leeches that have stolen your content. BluePay, a credit card processing company, has fallen victim to content scraping: the bottom half of this competitor's page was scraped entirely from BluePay's homepage. Scary, isn't it? To check for duplicate content within your own site, search for a snippet of your content in quotation marks and restrict the search to your domain with the site: operator (for example, "your snippet here" site:mysite.com). This shows you every page on your site where the content appears, and may point you to bugs on the site. If you find another website that has scraped your content, you can fill out a request for Google to remove it.
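
The same-site check can also be automated for pages you have already fetched. This sketch only catches copies that are identical after whitespace and case normalization, so near-duplicates need a fuzzier comparison. `find_duplicates` and `canonical_tag` are hypothetical helper names.

```python
import hashlib
import re
from collections import defaultdict
from html import escape


def fingerprint(text):
    """Hash of the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha1(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """pages maps URL -> extracted body text; returns groups of URLs with identical text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


def canonical_tag(preferred_url):
    """<link> element to place in the <head> of each duplicate page."""
    return f'<link rel="canonical" href="{escape(preferred_url, quote=True)}">'
```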

One last key point about content optimization is advertising. If your website is oversaturated with advertisements, Google may penalize it. Irrelevant ads carry a risk too: if your website is about GPS tracking for trucks and you run text link ads about online poker, you might get penalized. Avoid creating a mosaic of advertisements on your website. Use keyword-rich ALT text on all images, including image ads and image links, to help search engines better understand the content.

Geo Redirects

Geo redirects may seem like a great way for international websites to send visitors to the correct language version of the site. However, they may be the worst SEO mistake of your life. If your site is reached at www.mysite.com and all of your backlinks point there, why would you want it to redirect to www.mysite.com/en/index.html? Automatically redirecting people away from the root domain that all your backlinks point to dilutes the link value those backlinks pass. Create a language selection option on the homepage instead.
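
One way to keep the homepage at the root URL and still help international visitors is to use the browser's `Accept-Language` header only to pre-select an option in the on-page language picker. The sketch below is an assumption about how you might do that, not something the article prescribes. The page is still served in place, with no redirect.

```python
def suggest_language(accept_language, available, default="en"):
    """Pick the best available language code from an Accept-Language header.

    Used only to pre-select the homepage language picker; no redirect is issued.
    """
    choices = []
    for position, part in enumerate(accept_language.split(",")):
        piece = part.strip()
        if not piece:
            continue
        tag, _, params = piece.partition(";")
        quality = 1.0
        params = params.strip()
        if params.startswith("q="):
            try:
                quality = float(params[2:])
            except ValueError:
                quality = 0.0
        if quality <= 0:
            continue  # q=0 means "not acceptable"
        primary = tag.strip().lower().split("-")[0]  # "hu-HU" -> "hu"
        choices.append((-quality, position, primary))
    for _, _, primary in sorted(choices):  # highest q first, then header order
        if primary in available:
            return primary
    return default
```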

Image Optimization

Image optimization is a commonly overlooked practice, but it carries a lot of SEO value when done properly. Read my article on image optimization best practices for a full guide. This practice matters mainly for getting images indexed in image search. Add captions and keyword-rich ALT text to each image, and keep ALT text under 50 characters. Filenames should include keywords, with the words separated by dashes.
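
A small Python sketch that flags images breaking these rules: missing ALT text, ALT text over 50 characters, or underscores and spaces in the filename. `audit_images` is a hypothetical helper. It applies the article's limits and does not check whether the keywords are actually present.

```python
import posixpath
from html.parser import HTMLParser
from urllib.parse import urlsplit


class ImageAudit(HTMLParser):
    """Flags <img> tags that break the ALT-text and filename rules."""

    def __init__(self, max_alt=50):
        super().__init__()
        self.max_alt = max_alt
        self.problems = []  # (src, description) pairs

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or ""
        alt = (attributes.get("alt") or "").strip()
        filename = posixpath.basename(urlsplit(src).path)
        if not alt:
            self.problems.append((src, "missing ALT text"))
        elif len(alt) > self.max_alt:
            self.problems.append((src, f"ALT text is {len(alt)} chars"))
        if "_" in filename or " " in filename or "%20" in filename:
            self.problems.append((src, "use dashes in the filename"))


def audit_images(html, max_alt=50):
    parser = ImageAudit(max_alt)
    parser.feed(html)
    parser.close()
    return parser.problems
```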

Internal Linking

Each page can contain up to around one hundred links. You may think that number is outrageously high, but it's really not hard to reach. Before getting started, run Xenu's Link Sleuth to find broken links on the site, and fix them. Make sure all internal links are text links. This is especially important for navigational links. Any halfway decent designer can put together attractive text-over-image links with CSS. All category and main pages should be linked from the global navigation. Include footer links at the bottom of the page as a secondary form of navigation, linking to subcategory, terms of use, social media, privacy statement, sitemap, and contact pages. Use good anchor text for the subcategory links in the footer, and include links with good anchor text throughout the page content as well. Breadcrumbs are another great form of internal linking, and they greatly improve a site's usability.
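
To find internal links that are not text links, such as image-only links with no anchor text, a parser can collect each link's visible text. This is a sketch. `links_without_text` is a hypothetical helper, and it does not follow links or check for broken ones the way Xenu's Link Sleuth does.

```python
from html.parser import HTMLParser


class AnchorTextAudit(HTMLParser):
    """Records (href, visible anchor text) for every <a href> on a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.links.append((self._href, text))
            self._href = None

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)


def links_without_text(html):
    """Hrefs whose anchor has no visible text (for example, an <img> alone)."""
    parser = AnchorTextAudit()
    parser.feed(html)
    parser.close()
    return [href for href, text in parser.links if not text]
```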

Microdata

Microdata is a little-covered topic in SEO, but it packs a powerful punch. Microdata adds machine-readable labels to your markup, which gives search engine spiders a way to understand your content. You can define the meaning behind sections, elements, and words on each page. This helps build trust with search engines, especially Bing. I have already written a couple of articles on this topic. If you run an e-commerce site, check out my article on e-commerce microdata. If you run a B2B corporate site, check out my article on corporate B2B microdata.
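
For readers who have not seen microdata before, here is roughly what schema.org markup for a product looks like, generated from Python. The `Product` and `Offer` types and the `name`, `offers`, `price`, and `priceCurrency` properties come from schema.org. The `product_microdata` helper is hypothetical, and a real product page would usually carry more properties.

```python
from html import escape


def product_microdata(name, price, currency="USD"):
    """Return an HTML fragment marking up a product with schema.org microdata."""
    return (
        '<div itemscope itemtype="http://schema.org/Product">'
        f'<span itemprop="name">{escape(name)}</span>'
        '<div itemprop="offers" itemscope itemtype="http://schema.org/Offer">'
        f'<span itemprop="price">{escape(price)}</span>'
        f'<meta itemprop="priceCurrency" content="{escape(currency)}">'
        "</div></div>"
    )
```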

Noindex and Nofollow

Depending on the type of website you are building, there may be some pages you specifically do not want indexed or ranked. Irrelevant pages like the terms of use, PDF files, privacy policy, and sitemaps should be nofollowed. Use your own discretion for any other pages you may want to nofollow. Site search results pages should be both nofollowed and noindexed with a robots meta tag. Search engines can index those pages, and they will create duplicate content issues if you don't add these directives.
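
In practice, noindexing and nofollowing site search results usually means emitting a robots meta tag on those pages. A minimal sketch follows. It assumes, hypothetically, that search results are served under `/search`, and `robots_meta_for` is an illustrative name.

```python
from urllib.parse import urlsplit

SEARCH_PATH = "/search"  # hypothetical path of the site-search results page


def robots_meta_for(url):
    """Robots meta tag for site-search result pages; None for everything else."""
    path = urlsplit(url).path
    if path == SEARCH_PATH or path.startswith(SEARCH_PATH + "/"):
        return '<meta name="robots" content="noindex, nofollow">'
    return None
```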

Title and Meta Tags

Title tags should be unique for every page and contain a primary keyword phrase relevant to the page's content. If you have room for two keyword phrases, separate them with a comma. If the site belongs to a well-known brand, you may want to include the brand name in the title tag. Title tags should be 50-65 characters long, because titles longer than about 70 characters get cut off in SERPs.

Never use meta keywords. Spammers and scrapers use meta keywords all the time, so Bing may treat pages that use them as spam. Include a meta description on every page, with a keyword phrase similar to the one in the title tag. Descriptions should not exceed 160 characters. Never reuse the same description on two pages.
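
The title and description rules can be checked across a whole site in one pass. This sketch uses the article's numbers (titles of 50-65 characters, descriptions of at most 160 characters, no duplicates, no meta keywords). The input shape, a dict of URL to a dict with `title`, `description`, and optional `meta_keywords` keys, is an assumption.

```python
from collections import Counter


def audit_head_tags(pages):
    """pages maps URL -> {"title": str, "description": str, "meta_keywords": str (optional)}."""
    issues = []
    titles = Counter(p["title"] for p in pages.values())
    descriptions = Counter(p["description"] for p in pages.values())
    for url, p in pages.items():
        title, description = p["title"], p["description"]
        if not 50 <= len(title) <= 65:
            issues.append((url, f"title is {len(title)} chars (aim for 50-65)"))
        if titles[title] > 1:
            issues.append((url, "duplicate title"))
        if not description:
            issues.append((url, "missing meta description"))
        elif len(description) > 160:
            issues.append((url, f"description is {len(description)} chars (max 160)"))
        elif descriptions[description] > 1:
            issues.append((url, "duplicate description"))
        if p.get("meta_keywords"):
            issues.append((url, "remove meta keywords"))
    return issues
```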

Webmaster Tools

Google and Bing Webmaster Tools include several tools that are essential for SEO. The most important is sitemap submission. First, generate a sitemap for your website. Then upload it to the root directory of your server and submit it through Google and Bing Webmaster Tools. Submitting a sitemap tells search engines that you have new content and that it should be crawled regularly. Submitting your RSS feeds as sitemaps also carries a lot of SEO value, since Google and Bing love RSS feeds. Both webmaster tool suites have an option for doing this.
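
If your CMS doesn't generate a sitemap for you, a basic one following the sitemaps.org protocol (`urlset`, `url`, `loc`, and optional `lastmod`) can be built with Python's standard library. This is a minimal sketch. The URLs you pass in and the output filename are up to you.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_urlset(urls):
    """urls: iterable of (loc, lastmod) pairs; lastmod is 'YYYY-MM-DD' or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = loc  # ElementTree escapes & and <
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    return urlset


def sitemap_xml(urls):
    """Sitemap as a string (no XML declaration)."""
    return ET.tostring(build_urlset(urls), encoding="unicode")


def write_sitemap(urls, path="sitemap.xml"):
    """Write the sitemap with an XML declaration, ready to upload to the site root."""
    ET.ElementTree(build_urlset(urls)).write(path, encoding="utf-8", xml_declaration=True)
```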

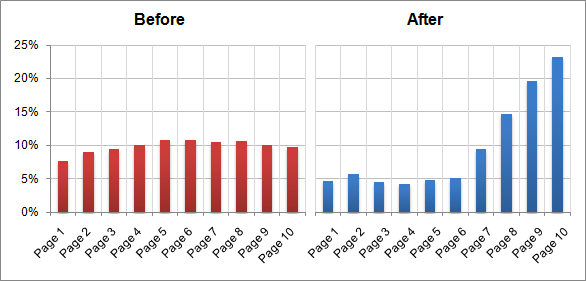

Webmaster tools can also be used to set the crawl rate, check for crawl errors, and submit pages for immediate indexation. If your content is updated daily, you may want to adjust the crawl rate so Googlebot visits daily. Another great tool is the "crawl errors" feature, which reports errors found while crawling the site. Fix every error it finds immediately. If you have just launched a new design or site, or just written a very important blog post, consider using the "Fetch as Googlebot" tool (Bing's equivalent is called "Submit URLs"). This can get the submitted page indexed quickly and give it a nice bump up in SERPs. Beware, though: if the page's click-through rate is very low, it may get penalized.

Website Architecture

Any page on a website should be reachable within two or three clicks from the homepage. Search engines are a lot like human readers when indexing pages. If it takes four clicks to reach a page, Googlebot will place low importance on it, while a page linked directly from the homepage will carry the most weight. When designing a site, decide how much importance you want to place on each page, and plan a content hierarchy for those pages. Pages targeting your top keywords belong near the top of the hierarchy, while pages focused on long-tail, less important keywords can sit toward the bottom. Now that Google can index AJAX and JavaScript content, there are lots of options for building a navigation menu that implements an effective content hierarchy.
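
Click depth is a shortest-path problem, so a breadth-first search from the homepage gives the minimum number of clicks to every page. The sketch assumes you already have a crawl result as a hypothetical `links` dict (page -> pages it links to). It uses the article's three-click guideline as the threshold.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search from the homepage: minimum clicks to reach each page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def pages_too_deep(links, home="/", max_clicks=3):
    """Pages that need more than max_clicks clicks from the homepage."""
    return sorted(p for p, d in click_depths(links, home).items() if d > max_clicks)


def orphan_pages(links, all_pages, home="/"):
    """Pages that cannot be reached from the homepage at all."""
    return sorted(set(all_pages) - set(click_depths(links, home)))
```

Orphan pages are worth checking separately, because BFS never visits them, so they can't show up as "too deep".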

Website Design Mistakes

Three common web design errors will wreck your website's crawlability: using frames, building the site entirely in Flash, and failing to set up 301 redirects after moving content. Designers are not SEO professionals, so cut them some slack. However, it is imperative that you review their plans before they implement any changes. If your designer wants to use frames, ask them if they plan on using Comic Sans while they're at it. Much like Comic Sans, frames are completely outdated from both a design and an SEO standpoint. A typical two-frame layout needs three HTML files (the frameset plus one file per frame), while a normal page uses only one. This confuses the heck out of spiders crawling your site, and often none of the pages end up getting indexed.

Flash is an eye-catching way to build a website, but if you plan on building an all-Flash site, you might as well go sit in the corner and talk to a brick wall, because that's what you would be doing to search engines. Flash cannot be crawled reliably, so don't implement any important site elements in Flash. Use Flash sparingly, and for decorative purposes only. Also check whether your designers set up redirects after moving content. If they did, make sure they used permanent 301 redirects rather than temporary 302 redirects. If a page that has already been indexed is moved without a redirect, the old URL will return a 404 error, which hurts your site's indexation.
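
Redirects are normally configured in the web server. The behaviour is easy to see in a tiny WSGI application, though. This is a sketch with a hypothetical `MOVED` mapping: moved URLs answer with `301 Moved Permanently` and a `Location` header, and unknown URLs get a 404.

```python
# Hypothetical mapping of old paths to their new homes after a redesign.
MOVED = {
    "/old-page.html": "/new-page",
    "/products.php": "/products",
}


def redirect_app(environ, start_response):
    """WSGI app: permanent (301) redirect for moved pages, 404 for anything else."""
    target = MOVED.get(environ.get("PATH_INFO", ""))
    if target is not None:
        # 301 marks the move as permanent; a 302 would mark it as temporary.
        start_response("301 Moved Permanently", [("Location", target), ("Content-Length", "0")])
        return [b""]
    body = b"Not Found"
    start_response("404 Not Found", [("Content-Type", "text/plain"), ("Content-Length", str(len(body)))])
    return [body]
```

To try it locally, `wsgiref.simple_server.make_server("", 8000, redirect_app).serve_forever()` from the standard library will serve it.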

Website Security

Providing a safe and secure experience will make both your audience and search engines more confident in your site. If your company owns several domains, make sure none of the content is duplicated across them. If you run an e-commerce site, make sure the checkout process is available on a secure (HTTPS) server. Set up robots.txt to block certain pages from being crawled by spiders, but be careful not to block any pages your internal linking structure depends on. Check your website to make sure it isn't infected by malware. Google Webmaster Tools has a free malware diagnostic tool for this. Web page errors can also cause search engines to mistrust your website, so run your site through the W3C Markup Validator to catch bugs that may cause indexation issues.
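
Before deploying a robots.txt change, you can check which of your important URLs it would block using Python's built-in `urllib.robotparser`. The robots.txt rules and URLs below are hypothetical examples. `blocked_urls` is an illustrative helper name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep checkout and site search out of the crawl.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /search
"""


def blocked_urls(robots_txt, urls, agent="Googlebot"):
    """Return the URLs that the given robots.txt forbids `agent` to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]
```

If a category page or another key page shows up in the result, the robots.txt rules are cutting into your internal linking structure.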

Summary

When performing a site audit, it’s helpful to create a checklist of violations to watch out for on the website. You can create one using this article, or you can use SEOmoz’s pre-made site audit checklist. Keep in mind that SEOmoz’s checklist is a few years old and is missing a few elements. Once the audit is complete, you may find it helpful to write up a list of structural recommendations with brief descriptions to help the client understand what you want to do for them.

Harrison Jones is an SEO Analyst at Straight North – a full-service Chicago marketing and web design firm. Harrison writes frequently about link acquisition, SEO, and video marketing. He has been published in several industry publications including Reel SEO and Search Engine Journal. Follow Straight North on Twitter for industry insight and conversations.

Lots of great info here, thank you!

My one question: While I know there are issues with Flash, it’s been my understanding for some time now that Google can crawl flash content to some extent, so I was surprised by your blanket statement “Flash cannot be crawled.” (see http://searchengineland.com/google-now-crawling-and-indexing-flash-content-14299).

I know Flash has issues, and all things being equal, it’s easier to choose something else to avoid them, but I’m wondering if you know something I don’t (but should)?

Thanks for such a fantastic post. I made the comment last night that this is the best SEO/optimization primer I’ve come across, and I’m printing out a copy so I can keep it close by. Thanks again!

Which SEO theme is best for a WordPress blog?

Thesis or another SEO theme?

Please suggest one good theme for my blog.

Awesome resource, thank you for sharing.

The article didn’t touch on the importance of “social” for websites right now. Can you provide your feedback on that Harrison? Thanks again!

This is a fantastic article. You opened my eyes to many things. Thank you very much!

I have a question for you about geo redirects. My site is currently available in English and Hungarian. I wanted to add a subdomain for the Hungarian version (hu.myenglishteacher.eu), but after reading your post I'm not sure whether I should. Could you please help me?

Loved the post! It covers many important factors, but can you please elaborate on keyword density? Is it important in onsite SEO efforts or not?

Also, does Google react the same way to meta keywords as Bing does (considers them spam) or is it ok to use meta keywords in Google?

Thanks again for the post.

All great points. Very useful list. The geo redirect point really drives home a thought I have been having lately. Is it fair that Google and its algorithm push web designers to make non-design decisions to enable good SEO practice? A language option is not the best solution for a user visiting a multi-language website. All real-world tech, from phones to entertainment media, is being designed with the user in mind and becoming easier to use. But websites are held back because a search giant says that usability doesn't trump SEO.

Editor’s Note: Sorry, is that really your last name? I edited out since it seems to match with your site’s URL, and well, you saw my blog policy, right?

These are essential for working out how well the website is performing in Google at any one time. They can also give you an idea of where to improve!